Resin Documentation

app server


clustering overview

Resin supports static and dynamic clusters, with servers either defined in configuration files or added and removed as load changes. Its clustering supports both public and private cloud configurations, and provides HTTP load balancing, distributed caching and sessions, and distributed deployment. To support the elastic-server cloud, Resin maintains the following when servers are added and removed:

In Resin, clustering is always enabled. Even if you only have a single server, that server belongs to its own cluster. As you add more servers, the servers are added to the cluster, and automatically gain benefits like clustered health monitoring, heartbeats, distributed management, triple redundancy, and distributed deployment. Resin clustering gives you:

To create a cluster, you add servers to the standard configuration files. Because clustering is built into Resin's foundations, the servers benefit from it automatically.

Example: sample resin.properties for a small cluster

...

app_servers : 192.168.1.10:6800 \

192.168.1.11:6801 \

192.168.1.12:6802 \

192.168.1.13:6803

...
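The server list above can also be read programmatically, for example by a provisioning script. The following is a minimal sketch, assuming the file can be parsed as a standard Java properties file (java.util.Properties accepts the "key : value" form and the backslash line continuations used above). The ServerListParser class and its parse method are hypothetical names for illustration, not part of Resin.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

/** Hypothetical helper (not a Resin API): reads an app_servers-style list. */
final class ServerListParser {
  /** Parses the whitespace-separated "address:port" entries stored under key. */
  static List<InetSocketAddress> parse(String propertiesText, String key) {
    Properties props = new Properties();
    try {
      // Assumption: the text follows java.util.Properties syntax.
      props.load(new StringReader(propertiesText));
    } catch (IOException e) {
      throw new UncheckedIOException(e);
    }

    List<InetSocketAddress> servers = new ArrayList<>();
    String value = props.getProperty(key, "").trim();
    if (value.isEmpty()) {
      return servers;
    }
    for (String entry : value.split("\\s+")) {
      int colon = entry.lastIndexOf(':');
      if (colon < 0) {
        throw new IllegalArgumentException("expected address:port, got " + entry);
      }
      String address = entry.substring(0, colon);
      int port = Integer.parseInt(entry.substring(colon + 1));
      // createUnresolved avoids a DNS lookup; the entry is only recorded.
      servers.add(InetSocketAddress.createUnresolved(address, port));
    }
    return servers;
  }
}
```

Calling ServerListParser.parse(text, "app_servers") on the sample above would yield four entries, one per configured server.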

While most web applications start their life-cycle deployed to a single server, it eventually becomes necessary to add servers, either for performance or for increased reliability. The single-server deployment model is very simple and is certainly the one developers are most familiar with, since single servers are usually used for development and testing (especially on a developer's local machine). As the usage of a web application grows beyond moderate numbers, the hardware of a single machine is simply not enough (the problem is even more acute when more than one high-use application is deployed to a single machine). The physical hardware limits of a server usually manifest themselves as chronically high CPU and memory usage despite reasonable performance tuning and hardware upgrades.

Server load-balancing solves the scaling problem by letting you deploy a single web application to more than one physical machine. These machines share the web application traffic, reducing the workload on any single machine and providing better performance from the perspective of the user. We'll discuss Resin's load-balancing capabilities in greater detail shortly, but load-balancing is usually achieved by transparently redirecting network traffic across multiple machines at the systems level via a hardware or software load-balancer (both of which Resin supports). Load-balancing also increases the reliability and up-time of a system, because even if one or more servers go down or are brought down for maintenance, the other servers can continue to handle traffic. With a single-server application, any down-time is directly visible to the user, drastically decreasing reliability.

If your application runs on multiple servers, Resin's clustered deployment helps you roll out new versions of your application to all servers and verify that all servers in the cluster are running the same version. When you deploy a new version, Resin ensures that all servers have an identical copy, validates the application, and then deploys it consistently. The clustered deployment is reliable across failures because it's built on a transactional version control system (Git). After a new server starts or a failed server restarts, it checks with the redundant triad for the latest application version, copying it as necessary and deploying it.

If web applications were entirely stateless, load-balancing alone would be sufficient to meet all application server scalability needs. In reality, most web applications are relatively heavily stateful. Even very simple web applications use the HTTP session to keep track of the current login, shopping-cart-like functionality and so on. Component-oriented web frameworks like JSF and Wicket in particular tend to use the HTTP session heavily to achieve greater development abstraction. Maintaining application state is also very natural for the CDI (Resin CanDI) and Seam programming models, with their stateful, conversational components.

When web applications are load-balanced, application state must somehow be shared across application servers. While in most cases you will likely use a sticky session (discussed in detail shortly) to pin a session to a single server, sharing state is still important for fail-over. Fail-over is the process of seamlessly transferring an existing session from one server to another when a load-balanced server fails or is taken down (probably for an upgrade). Without fail-over, the user would lose the session when a load-balanced server experiences down-time. For fail-over to work, application state must be synchronized or replicated in real time across a set of load-balanced servers. This state synchronization or replication process is generally known as clustering. In a similar vein, the set of synchronized, load-balanced servers is collectively called a cluster.

Resin supports robust clustering, including persistent sessions, distributed sessions and dynamically adding servers to and removing servers from a cluster (elastic/cloud computing). Building on the infrastructure required to support load-balancing, fail-over and clustering, Resin also supports a distributed caching API, clustered application deployment, and tools for administering the cluster.

Resin lets you add and remove servers dynamically, and automatically adjusts the cluster to manage those servers. When you add a new server, Resin:

Enabling dynamic servers in resin.properties

To enable dynamic servers, set three values in resin.properties: the triad hub servers for the cluster, the elastic flag, and the cluster to join.

resin.properties to enable dynamic servers

...

app_servers : 192.168.1.10:6800

elastic_cloud_enable : true

home_cluster : app

...

A similar pattern works for other clusters like the 'web' cluster. If you're enabling dynamic web servers, configure web_servers for the hub and set home_cluster to 'web'.

If you use a custom resin.xml configuration, you can add additional clusters following the same pattern. The standard resin.xml shows how the app_servers are defined. If you follow the same pattern, your custom 'mytier' cluster can use properties like mytier_servers and a home_cluster of 'mytier'.

Starting a dynamic server

The new server requires a copy of the site's resin.xml and resin.properties. In a cloud environment, you can clone the virtual machine with the properties preconfigured.

At least one server must be configured in the hub, because the new server needs the IP address of the hub servers to join the cluster.

The main option is "--elastic-server", which forces Resin to create a dynamic server, together with "--cluster app-tier", which tells the new server which cluster to join.

Example: minimal command-line for dynamic server

unix> resinctl start --elastic-server --cluster app-tier

As usual, you can name the server with "--server my-name". By default, a name is generated automatically.

You can omit --elastic-server and --cluster if you define the home_cluster and the server is not one of the static servers. (If you don't specify a --server, Resin searches for the local IP addresses among the addresses of the static servers. If it finds a match, Resin starts the server as a static server.)

After the server shuts down, the cluster will remember it for 15 minutes, giving the server time to restart before it is removed from the cluster.

Because each organization has different deployment strategies, Resin provides several deployment techniques to match your internal deployment strategy:

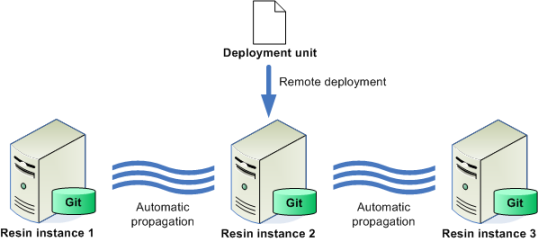

Resin clustered deployment synchronizes deployment across the cluster

These features work much like session clustering in that applications are automatically replicated across the cluster. Because Resin cluster deployment is based on Git, it also allows for application versioning and staging across the cluster.

The upload and copy method of deploying applications to the webapps directory is always available. Although uploading and copying application archives is common for single servers, it becomes more difficult to manage as your site scales to multiple servers. Along with the mechanics of copying the same file many times, you also need to keep the applications synchronized across all the servers, making sure that each server has a complete, consistent copy. The benefits of using Resin's clustered deployment include:

As an example, the command-line deployment of a .war file to a running Resin instance looks like the following:

unix> resinctl deploy test.war

Deployed production/webapp/default/test from /home/caucho/ws/test.war to http://192.168.1.10:8080/hmtp

Remote Deployment

In order to use Resin clustered deployment from a remote system, you must first enable remote deployment on the server, which is disabled by default for security. You do this using the following Resin configuration:

resin.properties: Enabling Resin Clustered Deployment

remote_cli_enable : true

admin_user : my-admin

admin_password : {SSHA}G3UOLv0dkJHTZxAwmhrIC2CRBZ4VJgTB

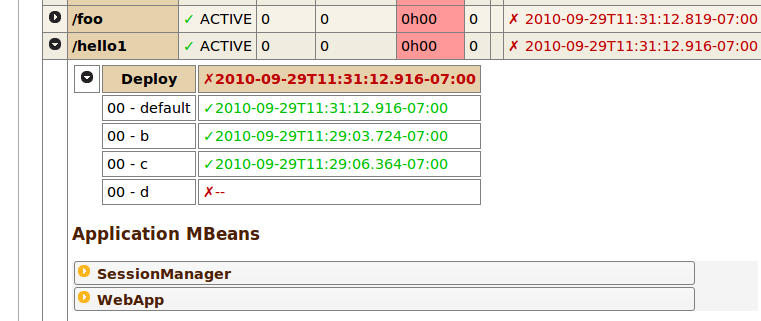

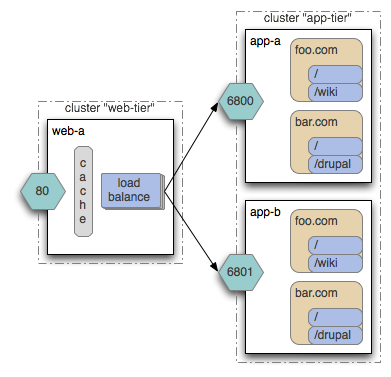

In the example above, both the remote admin service and the deployment service are enabled. Note that the admin authenticator must be enabled for any remote administration and deployment, for obvious security reasons. If you start with a clear-text password to keep things simple, you should switch to a password hash like the {SSHA} value shown above. When deploying remotely, you will need to include the user and password.

unix> resinctl deploy --user my-admin --password my-password test.war

Deployed production/webapp/default/test from /home/caucho/ws/test.war to http://192.168.1.10:8080/hmtp

Deployment details and architecture

The output exposes a few important details about the underlying remote deployment implementation that you should understand. Remote deployment for Resin uses Git under the hood. In case you are not familiar with it, Git is a distributed version control system. A great feature of Git is that it is really clever about avoiding redundancy and synchronizing data across a network. Under the hood, Resin stores deployed files as nodes in Git, with tags representing the type of file, development stage, virtual host, web application context root name and version. The format used is this:

stage/type/host/webapp[-version]

In the example, all web applications are stored under webapp; we didn't specify a stage or virtual host, so the defaults shown in the output (production and default) are used; the web application root is test; and no version is used since one was not specified. This format is key to the versioning and staging features we will discuss shortly.

As soon as your web application is uploaded to the Resin deployment repository, it is propagated to all the servers in the cluster, including any dynamic servers that are added to the cluster after the initial propagation.

When you deploy an application, it's always a good idea to check that all servers in the cluster have received the correct web-app.
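Returning to the repository tag format described above (stage/type/host/webapp[-version]), the following minimal sketch shows how such a tag could be composed and split. DeployTag and its methods are hypothetical names used only for illustration; Resin's own implementation is not shown in this document and may differ.

```java
/** Hypothetical helper (not a Resin API) for stage/type/host/webapp[-version] tags. */
final class DeployTag {
  /** Builds a tag; a null or empty version omits the "-version" suffix. */
  static String format(String stage, String type, String host,
                       String webapp, String version) {
    String base = String.join("/", stage, type, host, webapp);
    return (version == null || version.isEmpty()) ? base : base + "-" + version;
  }

  /**
   * Splits a tag into {stage, type, host, webapp[-version]}.
   * The last segment is returned as-is because a web-app name may itself
   * contain '-', so the version cannot be separated unambiguously here.
   */
  static String[] split(String tag) {
    String[] parts = tag.split("/");
    if (parts.length != 4) {
      throw new IllegalArgumentException("expected stage/type/host/webapp: " + tag);
    }
    return parts;
  }
}
```

For the deployment shown earlier, DeployTag.format("production", "webapp", "default", "test", null) produces the same production/webapp/default/test string that appears in the command output.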
Because Resin's deployment is based on a transactional version-control system, you can compare the exact version across all servers in the cluster using the /resin-admin web-app page.

The following screenshot shows the /hello1 webapp deployed across the cluster using a .war deployment in the webapps/ directory. Each version (date) is in green to indicate that the hash validation matches for each server. If one server had different content, the date would be marked with a red 'x'.

The details of the Ant and Maven based clustered deployment APIs are outlined separately.

Once your traffic increases beyond the capacity of a single application server, your site needs to add an HTTP load balancer as well as a second application server. Your system is now a two-tier system: an app-tier with your program and a web-tier for HTTP load-balancing and HTTP proxy caching. Since the load-balancing web-tier focuses on HTTP and proxy caching, you can usually handle many app-tier servers with a single web-tier load balancer. Although the largest sites will pay for an expensive hardware load balancer, medium and small sites can use Resin's built-in load-balancer as a cost-effective and efficient way to scale a moderate number of servers, while gaining the performance advantage of Resin's HTTP proxy caching.

A load-balanced system has three main requirements: define the servers to use as the application-tier, define the servers in the web-tier, and select which requests are forwarded (proxied) to the backend app-tier servers. Since Resin's clustering configuration already defines the servers in a cluster, the only additional configuration is to use the <resin:LoadBalance> tag to forward requests to the backend.

resin.properties load balancing configuration

For load balancing with the standard resin.xml and resin.properties, web_servers defines the static servers for the web (load-balancing) tier, and app_servers defines the static servers for the application (backend) tier. The standard resin.xml configuration will proxy requests from the web tier to the app tier.

resin.properties: load balancing

web_servers : 192.168.1.20:6820

web.http : 80

app_servers : 192.168.1.10:6820 \

192.168.1.11:6820 \

192.168.1.22:6820

app.http : 8080

resin.xml load balancing configuration

Dispatching requests with load balancing in Resin is accomplished through the <resin:LoadBalance> tag placed on a cluster. In effect, the <resin:LoadBalance> tag turns a set of servers in a cluster into a software load-balancer. This makes a lot of sense given that, once the traffic on your application requires multiple servers, your site will naturally be split into two tiers: an application server tier for running your web applications, and a web/HTTP server tier talking to end-point browsers, caching static/non-static content, and distributing load across servers in the application server tier.

The best way to understand how this works is through a simple example configuration. The following resin.xml configuration shows servers split into two clusters: a web-tier for load balancing and HTTP, and an app-tier to process the application:

Example: resin.xml for Load-Balancing

<resin xmlns="http://caucho.com/ns/resin"

xmlns:resin="urn:java:com.caucho.resin">

<cluster-default>

<resin:import path="${__DIR__}/app-default.xml"/>

</cluster-default>

<cluster id="app-tier">

<server id="app-a" address="192.168.0.10" port="6800"/>

<server id="app-b" address="192.168.0.11" port="6800"/>

<host id="">

<web-app id="" root-directory="/var/resin/htdocs"/>

</host>

</cluster>

<cluster id="web-tier">

<server id="web-a" address="192.168.0.1" port="6800">

<http port="80"/>

</server>

<proxy-cache memory-size="256M"/>

<host id="">

<resin:LoadBalance regexp="" cluster="app-tier"/>

</host>

</cluster>

</resin>

In the configuration above, the web-tier cluster server load balances across the app-tier cluster servers because of the cluster attribute specified in the <resin:LoadBalance> tag. The <proxy-cache> tag enables proxy caching at the web tier. The web-tier forwards requests evenly and skips any app-tier server that's down for maintenance or upgrade, or restarting after a crash. The load balancer also steers traffic from a single session to the same app-tier server (a.k.a. sticky sessions), improving caching and session performance.

Each app-tier server produces the same application because they have the same virtual hosts, web-applications and servlets, and use the same resin.xml. Adding a new machine just requires adding a new <server> tag to the cluster. Each server has a unique name like "app-b" and a TCP cluster-port consisting of an <IP,port>, so the other servers can communicate with it. Although you can start multiple Resin servers on the same machine, TCP requires the <IP,port> to be unique, so you might need to assign unique ports like 6801, 6802 for servers on the same machine. On different machines, you'll use unique IP addresses. Because the cluster-port is for Resin servers to communicate with each other, the addresses will typically be private IP addresses like 192.168.1.10 and not public IP addresses. In particular, the load balancer on the web-tier uses the cluster-port of the app-tier to forward HTTP requests.

When no explicit server is specified, Resin will search for local IP addresses that match the configured servers and start all of them. This local IP searching lets Resin's service start script work without modification. When dynamic servers are enabled and no local server matches, Resin will start a new dynamic server to join the home_cluster cluster.

Starting Local Servers

unix> resinctl start-all

All three servers will use the same resin.xml, which makes managing multiple server configurations pretty easy. The servers are named by the server id attribute, which must be unique, just like the <IP,port>. When you start a Resin instance, you'll use the server-id as part of the command line:

Starting Explicit Servers

192.168.0.10> resinctl -server app-a start

192.168.0.11> resinctl -server app-b start

192.168.0.1> resinctl -server web-a start

Since Resin lets you start multiple servers on the same machine, a small site might start the web-tier server and one of the app-tier servers on one machine, and start the second app-tier server on a second machine. You can even start all three servers on the same machine, increasing reliability and easing maintenance without addressing the additional load (although it will still be problematic if the physical machine itself, and not just the JVM, crashes). If you do put multiple servers on the same machine, remember to change the port to something like 6801, etc., so the TCP binds do not conflict.

In the /resin-admin management page, you can manage all three servers at once, gathering statistics/load and watching for any errors. When setting up /resin-admin on a web-tier server, remember to add a separate <web-app> for resin-admin to make sure the <rewrite-dispatch> doesn't inadvertently send the management request to the app-tier.

Sticky/Persistent Sessions

To understand what sticky sessions are and why they are important, it is easiest to see what happens without them. Let us take our previous example and assume that the web tier distributes sessions across the application tier in a totally random fashion. Recall that while Resin can replicate the HTTP session across the cluster, it does not do so by default. Now let's assume that the first request to the web application resolves to app-a and results in the login being stored in the session. If the load-balancer distributes sessions randomly, there is no guarantee that the next request will come to app-a. If it goes to app-b, that server instance will have no knowledge of the login and will start a fresh session, forcing the user to log in again. The same issue would be repeated as the user continues to use the application in what, from their view, should be a single session with well-defined state, making for a pretty confusing experience with application state randomly getting lost!

One way to solve this issue would be to fully synchronize the session across the load-balanced servers. Resin does support this through its clustering features (which are discussed in detail in the following sections). The problem is that the cost of continuously doing this synchronization across the entire cluster is very high and relatively unnecessary. This is where sticky sessions come in. With sticky sessions, the load-balancer makes sure that once a session is started, any subsequent requests from the client go to the same server where the session resides. By default, the Resin load-balancer enforces sticky sessions, so you don't really need to do anything to enable them. Resin accomplishes this by encoding the session cookie with the host name that started the session. Using our example, the hosts would generate cookies like this:

On the web-tier, Resin decodes the session cookie and sends the request to the appropriate server. So a session id that starts with 'b' will go to app-b. If app-b fails or is down for maintenance, Resin will send the request to a backup server calculated from the session id, in this case app-a. Because the session is not clustered, the user will lose the session, but they won't see a connection failure (to see how to avoid losing the session, check out the following section on clustering).

Manually Choosing a Server

For testing purposes you might want to send requests to a specific server in the app-tier manually. You can easily do this since the web-tier uses the value of the jsessionid to maintain sticky sessions. You can include an explicit jsessionid to force the web-tier to use a particular server in the app-tier. Since Resin uses the first character of the jsessionid to identify the server to use, starting it with 'a' will resolve the request to app-a. If www.example.com resolves to your web-tier, then you can use values like this for testing:
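As a rough illustration of the routing rule described in this section, the sketch below maps the first character of a session id to a server ('a' to the first server, 'b' to the second) and falls back to the next live server when the preferred one is down. StickyRouter is a hypothetical class, and both the fallback order and the handling of unrecognized ids are assumptions made for the example; the text above only says that the backup is calculated from the session id.

```java
import java.util.List;
import java.util.Set;

/** Hypothetical sticky-session router; not Resin's actual implementation. */
final class StickyRouter {
  private final List<String> servers;

  StickyRouter(List<String> servers) {
    this.servers = List.copyOf(servers);
  }

  /** Returns the chosen server id, or null when every server is down. */
  String route(String sessionId, Set<String> downServers) {
    int primary = primaryIndex(sessionId);
    // Assumption: the backup is simply the next live server after the primary.
    for (int i = 0; i < servers.size(); i++) {
      String candidate = servers.get((primary + i) % servers.size());
      if (!downServers.contains(candidate)) {
        return candidate;
      }
    }
    return null;
  }

  /** 'a' selects server 0, 'b' server 1, and so on. */
  private int primaryIndex(String sessionId) {
    if (sessionId == null || sessionId.isEmpty()) {
      return 0; // assumption: requests without a session start at the first server
    }
    int index = sessionId.charAt(0) - 'a';
    return (index >= 0 && index < servers.size()) ? index : 0;
  }
}
```

With servers app-a and app-b, a session id beginning with 'b' routes to app-b, and to app-a while app-b is marked down, matching the failover behaviour described above.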

You can also use this fact to configure an external sticky load-balancer (likely a high-performance hardware load-balancer) and eliminate the web tier altogether. In this case, this is what the Resin configuration might look like:

resin.xml with Hardware Load-Balancer

<resin xmlns="http://caucho.com/ns/resin">

<cluster id="app-tier">

<server-default>

<http port='80'/>

</server-default>

<server id='app-a' address='192.168.0.1' port="6800"/>

<server id='app-b' address='192.168.0.2' port="6800"/>

<server id='app-c' address='192.168.0.3' port="6800"/>

</cluster>

</resin>

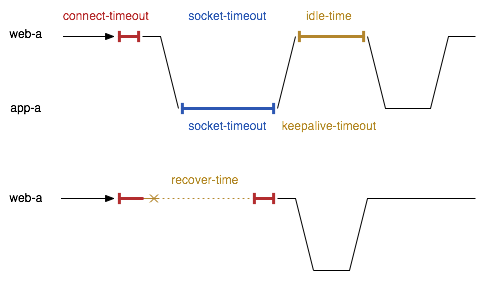

Each server will be started with its own server id (-server app-a, -server app-b, etc.) to grab its specific configuration.

Socket Pooling, Timeouts, and Failover

For efficiency, Resin's load balancer manages a pool of sockets connecting to the app-tier servers. If Resin forwards a new request to an app-tier server and it has an idle socket available in the pool, it will reuse that socket, improving performance and minimizing network load. Resin uses a set of timeout values to manage the socket pool and to handle any failures or freezes of the backend servers. The following diagram illustrates the main timeout values:

When an app-tier server is down due to maintenance or a crash, Resin will use the load-balance-recover-time as a delay before retrying the downed server. With the failover and recover timeout, the load balancer reduces the cost of a failed server to almost no time at all. Every recover-time, Resin will try a new connection and wait for load-balance-connect-timeout for the server to respond. At most, one request every 15 seconds might wait an extra 5 seconds to connect to the backend server. All other requests will automatically go to the other servers.

The socket-timeout values tell Resin when a socket connection is dead and should be dropped. The web-tier timeout load-balance-socket-timeout is much larger than the app-tier timeout socket-timeout because the web-tier needs to wait for the application to generate the response. If your application has some very slow pages, like a complicated nightly report, you may need to increase the load-balance-socket-timeout to avoid the web-tier disconnecting it.

Likewise, the load-balance-idle-time and keepalive-timeout are a matching pair for the socket idle pool. The idle-time tells the web-tier how long it can keep an idle socket before closing it. The keepalive-timeout tells the app-tier how long it should listen for new requests on the socket. The keepalive-timeout must be significantly larger than the load-balance-idle-time so the app-tier doesn't close its sockets too soon. The keepalive timeout can be large since the app-tier can use the keepalive-select manager to efficiently wait for many connections at once.

resin:LoadBalance Dispatching

<resin:LoadBalance> is part of Resin's rewrite capabilities, Resin's equivalent of the Apache mod_rewrite module, providing powerful and detailed URL matching and decoding. More sophisticated sites might load-balance based on the virtual host or URL using multiple <resin:LoadBalance> tags.

In most cases, the web-tier will dispatch everything to the app-tier servers. Because of Resin's proxy cache, the web-tier servers will serve static pages as fast as if they were local pages.

In some cases, though, it may be important to send different requests to different backend clusters. The <resin:LoadBalance> tag can choose clusters based on URL patterns when such capabilities are needed.

The following rewrite example keeps all *.png, *.gif, and *.jpg files on the web-tier, sends everything in /foo/* to the foo-tier cluster, everything in /bar/* to the bar-tier cluster, and keeps anything else on the web-tier.

Example: resin.xml Split Dispatching

<resin xmlns="http://caucho.com/ns/resin"

xmlns:resin="urn:java:com.caucho.resin">

<cluster-default>

<resin:import path="classpath:META-INF/caucho/app-default.xml"/>

</cluster-default>

<cluster id="web-tier">

<server id="web-a">

<http port="80"/>

</server>

<proxy-cache memory-size="64m"/>

<host id="">

<web-app id="/">

<resin:Dispatch regexp="(\.png|\.gif|\.jpg)"/>

<resin:LoadBalance regexp="^/foo" cluster="foo-tier"/>

<resin:LoadBalance regexp="^/bar" cluster="bar-tier"/>

</web-app>

</host>

</cluster>

<cluster id="foo-tier">

...

</cluster>

<cluster id="bar-tier">

...

</cluster>

</resin>
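The effect of the split-dispatching rules above can be approximated in a few lines of Java. This is only a sketch under two assumptions that the text does not state explicitly: rules are evaluated in document order with the first match winning, and each regexp is matched anywhere in the request path. DispatchSketch and its methods are hypothetical names, not Resin classes.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.regex.Pattern;

/** Hypothetical model of ordered rewrite rules; not Resin's implementation. */
final class DispatchSketch {
  /** Target used when a request stays on the web-tier. */
  static final String LOCAL = "web-tier";

  private final List<Map.Entry<Pattern, String>> rules = new ArrayList<>();

  /** Appends a rule; earlier rules take precedence (assumption). */
  DispatchSketch rule(String regexp, String target) {
    rules.add(Map.entry(Pattern.compile(regexp), target));
    return this;
  }

  /** Returns the cluster that would handle the path, or LOCAL if none match. */
  String targetFor(String path) {
    for (Map.Entry<Pattern, String> rule : rules) {
      if (rule.getKey().matcher(path).find()) {
        return rule.getValue();
      }
    }
    return LOCAL;
  }

  /** Mirrors the three rules in the resin.xml example above. */
  static DispatchSketch fromExample() {
    return new DispatchSketch()
        .rule("(\\.png|\\.gif|\\.jpg)", LOCAL)
        .rule("^/foo", "foo-tier")
        .rule("^/bar", "bar-tier");
  }
}
```

Under these assumptions, /foo/list goes to foo-tier, /bar/item goes to bar-tier, and both /foo/logo.png and /index.html stay on the web-tier.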

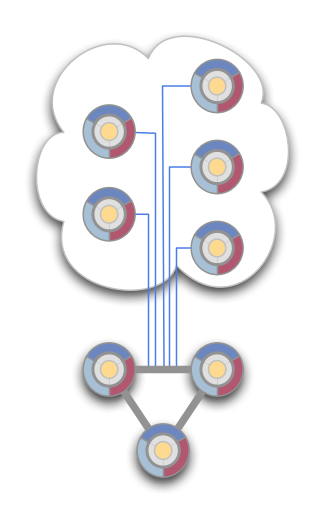

For details on the tags used for clustering, please refer to the reference documentation.

After many years of developing Resin's distributed clustering for a wide variety of user configurations, we refined our clustering network to a hub-and-spoke model using a triple-redundant triad of servers as the cluster hub. The triad model provides interlocking benefits for the dynamically scaling server configurations people are using.

Cluster Heartbeat

As part of Resin's health check system, the cluster continually checks that all servers are alive and responding properly. Every 60 seconds, each server sends a heartbeat to the triad, and each triad server sends a heartbeat to all the other servers.

When a server failure is detected, Resin logs it for an administrator's analysis and internally prepares to fail over to backup servers for any messaging for distributed storage, like the clustered sessions and the clustered deployment.

When the server comes back up, the heartbeat is reestablished and any missing data is recovered.

Because load balancing, maintenance and failover should be invisible to your users, Resin can replicate users' session data across the cluster. When the load-balancer fails over a request to a backup server, or when you dynamically remove a server, the backup can grab the session and continue processing. From the user's perspective, nothing has changed. To make this process fast and reliable, Resin uses the triad servers as a triplicate backup for the user's session.

The following is a simple example of how to enable session clustering for all web-apps using the standard resin.properties:

Example: resin.properties enabling clustered sessions

...

session_store : true

...

If you want to configure persistent sessions for a specific web-app, you can set the use-persistent-store attribute of the <session-config>.

Example: resin-web.xml enabling clustered sessions

<web-app xmlns="http://caucho.com/ns/resin">

<session-config use-persistent-store="true"/>

</web-app>

Example: Clustering for All Web Applications

<resin xmlns="http://caucho.com/ns/resin">

<cluster>

<server id="a" address="192.168.0.1" port="6800"/>

<server id="b" address="192.168.0.2" port="6800"/>

<web-app-default>

<session-config use-persistent-store="true"/>

</web-app-default>

</cluster>

</resin>

The above example also shows how you can override the clustering behaviour for Resin. By default, Resin uses triad-based replication, with all three triad servers backing up the data server. The <persistent-store type="cluster"> has a number of other attributes:

The cluster store is also valuable for single-server configurations, because Resin stores a copy of the session data on disk, allowing for recovery after a system restart. This is basically identical to the file persistence feature discussed below.

always-save-session

Resin's distributed sessions need to know when a session has changed in order to save/synchronize the new session value. Although Resin does detect when an application calls HttpSession.setAttribute(), it can't tell if an internal session value has changed. The following Counter class shows the issue:

Counter.java

package test;

public class Counter implements java.io.Serializable {

private int _count;

public int nextCount() { return _count++; }

}
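To make the issue concrete, the sketch below models a session that marks itself as changed only when setAttribute is called, which is the detection behaviour this section describes. ChangeTrackingSession is a hypothetical stand-in written for illustration (with its own copy of Counter so the block is self-contained); it is not Resin's session implementation.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical session model that only notices setAttribute calls. */
final class ChangeTrackingSession {
  /** Same shape as the Counter class above, repeated for self-containment. */
  static final class Counter implements java.io.Serializable {
    private int count;

    int nextCount() {
      return count++;
    }
  }

  private final Map<String, Object> attributes = new HashMap<>();
  private boolean changed;

  void setAttribute(String name, Object value) {
    attributes.put(name, value);
    changed = true; // the only point where a change is observed
  }

  Object getAttribute(String name) {
    return attributes.get(name);
  }

  /**
   * Called at the end of a request. Returns true if the session would be
   * saved: either a change was observed or alwaysSave forces the save.
   */
  boolean finishRequest(boolean alwaysSave) {
    boolean save = changed || alwaysSave;
    changed = false;
    return save;
  }
}
```

In this model, storing the counter triggers a save, but a later request that only calls nextCount() on the stored object does not, unless the always-save flag is passed as true.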

Assuming a copy of the Counter is saved as a session attribute, Resin doesn't know if the application has called nextCount(). If it can't detect a change, Resin will not back up/synchronize the new session unless always-save-session is set on the <session-config>. When always-save-session is true, Resin will back up the entire session at the end of every request. Otherwise, a session is only saved when a change is detected.

...

<web-app id="/foo">

...

<session-config>

<use-persistent-store/>

<always-save-session/>

</session-config>

...

</web-app>

Serialization

Resin's distributed sessions rely on Hessian serialization to save and restore sessions. Application objects must implement java.io.Serializable for distributed sessions to work.

No Distributed Locking

Resin's clustering does not lock sessions for replication. For browser-based sessions, only one request will typically execute at a time. Because browser sessions have no concurrency, there's really no need for distributed locking. However, it's a good idea to be aware of the lack of distributed locking in Resin clustering.

For details on the tags used for clustering, please refer to the reference documentation.

Distributed caching is a useful technique to reduce database load and increase application performance. Resin provides a javax.cache.Cache (JSR-107 JCache) based distributed caching API. Distributed caching in Resin uses the underlying infrastructure used to support load balancing, clustering and session/state replication.

resin-web.xml: Configuring Resin Distributed Cache

<web-app xmlns="http://caucho.com/ns/resin"

xmlns:ee="urn:java:ee"

xmlns:distcache="urn:java:com.caucho.distcache">

<distcache:ClusterCache ee:Named="myCacheEE">

<name>myCache</name>

</distcache:ClusterCache>

</web-app>

You may then inject the cache through CDI and use it in your application via the JCache API: Using Resin Distributed Cache

import javax.cache.*;

import javax.inject.*;

public class MyService

{

@Inject @Named("myCacheEE")

private Cache<String,String> _cache;

public void test()

{

_cache.putIfAbsent("foo", "bar");

}

}

@CacheResult

@CacheResult enables caching for method results. It uses the method parameters as a cache key and stores the method result in the cache. On the next method call with the same parameters, the enhanced method looks for the saved result in the cache and returns it, saving the effort of running the method again.

WEB-INF/beans.xml (to enable CDI scanning)

<beans/>

MyBean.java

package org.example.mypkg;

import javax.cache.annotation.CacheResult;

public class MyBean {

@CacheResult

public String doLongOperation(String key)

{

...

}

}

MyServlet.java

package org.example.mypkg;

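import java.io.IOException;

import java.io.PrintWriter;

import javax.inject.Inject;

import javax.servlet.GenericServlet;

import javax.servlet.ServletException;

import javax.servlet.ServletRequest;

import javax.servlet.ServletResponse;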

public class MyServlet extends GenericServlet {

@Inject MyBean _bean;

public void service(ServletRequest req, ServletResponse res)

throws IOException, ServletException

{

PrintWriter out = res.getWriter();

String result = _bean.doLongOperation("test");

out.println("test: " + result);

}

}
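Conceptually, the caching behaviour that @CacheResult adds to doLongOperation resembles memoization keyed on the method argument. The sketch below illustrates that idea with a plain in-memory map from the JDK. It is an assumption-laden stand-in: it is local to one JVM, it is not distributed, and it is not how Resin or JCache implement the annotation. MemoizedOperation is a hypothetical name.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Function;

/** Hypothetical in-memory stand-in for a method whose results are cached. */
final class MemoizedOperation {
  private final Map<String, String> cache = new ConcurrentHashMap<>();
  private final AtomicInteger invocations = new AtomicInteger();
  private final Function<String, String> slowOperation;

  MemoizedOperation(Function<String, String> slowOperation) {
    this.slowOperation = slowOperation;
  }

  /** Uses the argument as the cache key and runs the operation on a miss. */
  String doLongOperation(String key) {
    return cache.computeIfAbsent(key, k -> {
      invocations.incrementAndGet(); // counts real executions only
      return slowOperation.apply(k);
    });
  }

  /** Number of times the underlying operation actually ran. */
  int invocations() {
    return invocations.get();
  }
}
```

Calling doLongOperation("test") twice runs the underlying function once; the second call is answered from the map, which is the saving the annotation is meant to provide across the cluster.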
